AI-driven cyberattacks are reshaping the threat landscape. By using machine learning and advanced algorithms, attackers can automate, adapt, and customise their campaigns faster than ever. This article breaks down how these attacks work, the main types you should watch out for, and simple steps you can take to protect yourself.

What Makes an Attack “AI-Driven”?

At its core, an AI-driven cyberattack uses AI or machine learning to automate or enhance one or more stages of an attack. Instead of manual reconnaissance and trial-and-error, attackers employ algorithms that learn from data, adapt in real time, and evade traditional defences. They scan for vulnerabilities, craft personalised phishing emails and messages, and even refine their tactics on the fly, often with little human intervention.

Why does this matter? Because automation and learning speed up attacks and make them more precise. An attacker can target high-value individuals or systems in minutes, rather than days.

Key Characteristics of AI-Driven Threats

- Attack Automation: AI scripts launch attacks at scale, reducing the need for hands-on effort.

- Efficient Reconnaissance: Algorithms scrape public data, such as social media profiles and corporate sites, to pinpoint weak spots far faster than a human analyst could.

- Hyper-Personalisation: AI generates bespoke messages or multimedia content for each target, boosting success rates.

- Real-Time Adaptation: Through reinforcement learning, attacks evolve to avoid detection by security tools.

- High-Value Targeting: AI models analyse user roles and behaviours to single out individuals who can open the biggest doors.

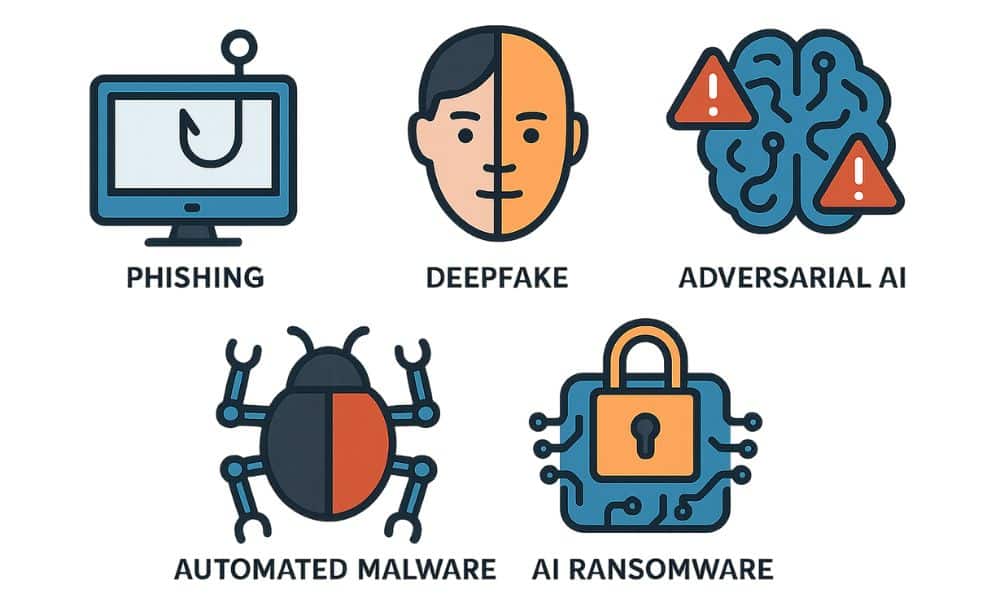

Most Common Types of AI-Driven Cyberattacks

1. AI-Powered Phishing and Social Engineering

Imagine a phishing email that addresses you by name, references your latest projects, and mimics your manager’s tone perfectly. Attackers use generative AI to craft these ultra-realistic lures. They even deploy chatbots that respond in real time, luring you into sharing credentials or clicking malicious links.
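Because the wording of an AI-crafted lure can be flawless, it helps to check signals the text itself cannot fake so easily. The sketch below is a minimal, illustrative header check, not a complete phishing filter. The function names, the sample message, and the domain `example-corp.com` are all hypothetical.

```python
from email import message_from_string
from email.utils import parseaddr


def domain_of(header_value: str) -> str:
    """Return the lower-cased domain of the address in a header, or ''."""
    _, address = parseaddr(header_value or "")
    return address.rpartition("@")[2].lower() if "@" in address else ""


def suspicious_signals(raw_email: str, trusted_domain: str) -> list:
    """List simple header-level warning signs for one raw email message."""
    msg = message_from_string(raw_email)
    from_domain = domain_of(msg.get("From", ""))
    reply_domain = domain_of(msg.get("Reply-To", ""))

    signals = []
    # A look-alike domain (e.g. "examp1e" for "example") fails this check.
    if from_domain != trusted_domain.lower():
        signals.append("sender domain is not the trusted domain")
    # Replies silently routed elsewhere are a common lure pattern.
    if reply_domain and reply_domain != from_domain:
        signals.append("Reply-To domain differs from From domain")
    return signals


# Hypothetical message that imitates a manager's tone but not the real domain.
sample = (
    "From: Your Manager <manager@examp1e-corp.com>\n"
    "Reply-To: manager@mail-collect.example\n"
    "Subject: Quick favour before the 3pm review\n"
    "\n"
    "Can you log in here and approve the invoice?\n"
)
print(suspicious_signals(sample, trusted_domain="example-corp.com"))
```

A check like this complements, rather than replaces, mail-server protections and user training. It simply shows that a convincing tone does not change where a message actually came from.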

2. Deepfakes for Deception

Have you seen those eerily realistic fake videos or voice clips? Deepfakes fit right into social-engineering schemes. An attacker might place a call that appears to come from your CEO, urging you to wire funds quickly. It sounds unbelievable, but deepfakes make it frighteningly easy.

3. Adversarial AI Attacks

Here, attackers target the AI models themselves. They poison training data or feed tweaked inputs that fool detection systems. In poisoning attacks, they corrupt the model’s learning material; in evasion attacks, they subtly alter inputs so security tools misclassify threats as harmless.
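To see why small input changes can flip a verdict, consider a toy linear detector. This is a minimal sketch with made-up weights and feature values, not a model of any real security product: each feature is nudged by a small amount `epsilon` against the sign of its weight, which is the simplest form of an evasion attack on a linear model.

```python
def score(weights, bias, features):
    """Linear detector score: positive means 'looks malicious'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def is_flagged(weights, bias, features):
    """Return True when the detector classifies the input as a threat."""
    return score(weights, bias, features) > 0


def evade(weights, features, epsilon):
    """Shift every feature by epsilon against the sign of its weight.

    For a linear model this lowers the score by epsilon * sum(|w|).
    """
    def sign(w):
        return 1 if w > 0 else -1 if w < 0 else 0

    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


# Hypothetical detector parameters and one hypothetical malicious sample.
weights = [2.0, -1.0, 0.5]
bias = -1.0
original = [0.8, 0.3, 0.4]

perturbed = evade(weights, original, epsilon=0.2)

print("original flagged: ", is_flagged(weights, bias, original))
print("perturbed flagged:", is_flagged(weights, bias, perturbed))
```

With these illustrative numbers, a shift of 0.2 per feature is enough to move the sample across the decision boundary. Defenders commonly counter this with techniques such as adversarial training and input validation, which are outside the scope of this sketch.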

4. Malicious GPTs and Automated Malware

Some threat actors repurpose open-source GPT models to generate new malware strains or phishing campaigns. They tweak prompts to spit out code for custom exploits or draft fake web pages to harvest credentials, all without writing a single line of code themselves.

5. AI-Enhanced Ransomware

Ransomware gangs now use AI to map networks, identify critical assets, and optimise encryption strategies. They can change encryption keys or mutate payloads to evade antivirus scanners, making each attack harder to detect than the last.

Real-World Examples of AI-Driven Attacks

- DeepLocker (2018): IBM researchers unveiled this proof-of-concept malware that stays dormant until on-device AI recognises specific biometric or geolocation triggers, then unleashes its hidden payload.

- AI-Enhanced Phishing Campaigns (2024): Attackers used generative AI to mimic executives’ writing styles, leading to several data breaches when employees clicked on convincing malicious links.

- Deepfake CEO Fraud (2019): A UK-based energy firm lost €220,000 after scammers used an AI-generated voice imitating the chief executive of its parent company to demand an urgent funds transfer.

- Adversarial Poisoning of Security Models (2023): Threat actors subtly tainted open-source malware-detection datasets, causing some AI-based antivirus tools to misclassify real malware as safe software.

- AI-Driven Ransomware Reconnaissance (2025): A ransomware group deployed machine-learning scripts to map corporate networks and pinpoint backup servers, accelerating their encryption attacks and maximising impact.

Simple Steps to Defend Against AI-Driven Attacks

You don’t need a PhD in data science to protect yourself. Here are straightforward actions you can take:

- Enable Multi-Factor Authentication (MFA): Even if an attacker steals your password, MFA adds a critical hurdle.

- Verify Unexpected Requests: If your “CEO” calls asking for wire transfers, double-check via a known channel.

- Keep Software Updated: Patches often close vulnerabilities that AI scans target first.

- Use AI-Enhanced Security Tools: Modern defences leverage AI to detect anomalies that static rules miss.

- Train Your Team: Run regular simulated phishing exercises so everyone learns to spot AI-crafted scams.

By adopting these steps, you close gaps that attackers exploit with their algorithms.
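To make the MFA step concrete, here is a minimal sketch of how the six-digit codes in an authenticator app can be derived, following the time-based one-time password scheme of RFC 6238 with HMAC-SHA1. It uses only the Python standard library. The function names and the example secret are illustrative assumptions, and a production system should rely on a maintained, audited library rather than this sketch.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Compute a time-based one-time password (RFC 6238, HMAC-SHA1)."""
    now = time.time() if at_time is None else at_time
    counter = int(now // step)  # number of 30-second steps since the epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation, as defined in RFC 4226
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, at_time: Optional[float] = None) -> bool:
    """Check a submitted code using a constant-time comparison."""
    return hmac.compare_digest(totp(secret, at_time), submitted)


# Hypothetical shared secret; real secrets are random and stored securely.
shared_secret = b"12345678901234567890"
current_code = totp(shared_secret)
print("code valid right now:", verify(shared_secret, current_code))
```

Because the code depends on both a shared secret and the current time, a stolen password alone is not enough to log in. Note that real-time phishing proxies can still relay a freshly entered code, which is one reason phishing-resistant methods such as hardware security keys are often recommended for high-value accounts.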

Real-World Anecdote: Spotting a Deepfake

A marketing director once received a video call seemingly from the company’s CFO. The voice and mannerisms matched perfectly. Only when she asked an off-the-wall personal question (“Who won the office horseshoe tournament last month?”) and the caller answered incorrectly did she realise it was a deepfake. She ended the call and reported it immediately. A simple verification step saved her from a costly error.

The Future: Staying Ahead of the Curve

AI will continue to fuel both attacks and defences. Gartner predicts that by 2026, 75% of security operations teams will use AI assistants to accelerate threat hunting and incident response. Staying informed and adopting AI-powered defences helps you keep pace in this arms race.

Conclusion

AI-driven cyberattacks grab headlines because they’re faster, smarter, and more elusive than ever. But you can fight back with awareness, strong security practices, and tools that leverage the same AI capabilities to defend your data. By understanding how these threats operate—and taking simple, proactive steps—you’ll keep attackers at bay and protect what matters most.