Kaspersky said something that genuinely shocked me: “There’s never been a better time to build software than right now, and at the same time, never a more challenging time to keep it secure.”

That’s not just hype—it’s the hard truth.

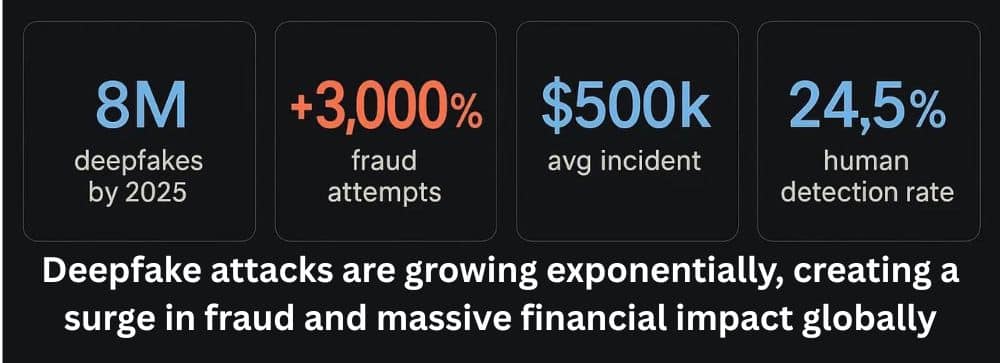

Deepfakes aren’t theoretical anymore. They’re active threats hitting development teams, and they keep getting more convincing. AI-based attacks bypass human intuition: they impersonate the people you trust and can compromise your development pipelines faster than traditional attacks. Enterprises worldwide are struggling with deepfake attacks that slip past traditional security.

The $500,000 Reality

We’re living through a surge right now. In just the first three months of 2025, deepfake incidents already matched the entire previous year, with over $200 million in losses in Q1 alone.

And here’s what keeps security teams up at night: nearly half of all code generated by AI tools has security flaws. If your developers use AI coding assistants (and most do), a large share of what those tools suggest could be open to attack.

It gets worse. Six months ago, we saw around 1,000 security issues per month from AI-generated code. Now we’re hitting 10,000 a month, a tenfold jump. The tools got faster, but they also got far more dangerous.

Here’s what attackers are actually doing these days: they combine multiple methods at once. You get a deepfake video call that looks and sounds exactly like your CTO asking you to deploy code. You also get vulnerable code from an AI tool. And you get a backdoor hiding in plain sight. It’s a coordinated attack that pairs social engineering with technical weakness, and your old defenses weren’t built to stop it.

How Deepfakes Actually Target Developers

Developers aren’t special. They’re vulnerable like everyone else. The difference is what attackers can do with that vulnerability.

Instead of attacking infrastructure, deepfakes target the human element: developers, project managers, anyone making decisions. One deepfake video call authorizes a transaction. One deepfake message approves malicious code. Meanwhile, malicious AI-generated code plants backdoors that sit undetected for months.

Example: Arup, a widely respected engineering firm, lost $25 million to a deepfake video call. The deepfake mimicked the company’s CFO convincingly, combining technical sophistication with psychological manipulation. No firewall stopped it, because the attack bypassed technical controls entirely and went straight for people.

North Korean operatives did something similar, using deepfake videos to get inside American businesses. This wasn’t about quick profit; it was a state-level operation aimed at long-term infiltration. That’s a new level of threat.

What Makes Modern AI Attacks Different?

These aren’t your typical hacks. Modern AI attacks have characteristics that make them genuinely dangerous.

- Adaptive Intelligence: AI attacks learn from your defenses and adapt instantly. Your static security signatures? Useless. The attack evolves in real time.

- Hyper-Personalization: Machine learning models analyze massive amounts of public data to create targeted campaigns. A deepfake call mentions specific project details, your team members’ names, and company processes. Everything feels authentic because the details are real.

- Multi-Modal Attacks: These combine voice, video, and text. Picture one deepfake voice call backed up by a “confirmation” email and a video message. Traditional verification breaks down because the attack targets multiple channels simultaneously.

- Supply Chain Poisoning: Attackers inject malware into AI tools, corrupt open-source models, and hide malicious code in development tools people trust. You think you’re using a legitimate tool. You’re actually compromised from the start.

The Technical Side of Deepfake Creation

You don’t need much to create convincing deepfakes anymore. That’s the problem.

Voice cloning takes 20 to 30 seconds of audio. That’s it. Attackers pull that audio from LinkedIn videos, podcasts, and recorded meetings. Thirty seconds, and the voice clone is done.

Video deepfakes take about 45 minutes to create with free tools. Free tools. Not expensive software. Not specialized skills. A motivated person with a laptop can generate a convincing video of your CTO in less time than a lunch break.

The process is straightforward: gather audio and video from any public source. Feed this data into AI models that analyze facial expressions, voice tone, and gestures. Finally, the AI creates new content that looks and sounds completely real.

Why Detection Isn’t Your Savior

Detection technology exists. It works sometimes. But relying on it is dangerous.

Detection systems trained on earlier deepfakes struggle to catch newer ones. As AI gets better at creating fakes, detection tools lag behind. It’s a constant race in which attackers’ technology advances faster than defenses, so you end up always a step behind.

Real-time detection isn’t perfect either. Some systems accurately detect deepfake signals, but they’re often too slow. By the time an alert goes off, the damage might already be done. Other systems flag legitimate communications as suspicious. False positives wreck trust in the security system itself.
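False positives matter more than they might seem, because real deepfake calls are rare compared with legitimate ones. The short calculation below illustrates this base-rate effect. The prevalence and error rates are hypothetical numbers chosen for illustration, not measurements of any real detector.

```python
def alert_precision(prevalence: float, true_positive_rate: float,
                    false_positive_rate: float) -> float:
    """Share of alerts that are genuine deepfakes (positive predictive value)."""
    true_alerts = prevalence * true_positive_rate
    false_alerts = (1 - prevalence) * false_positive_rate
    return true_alerts / (true_alerts + false_alerts)


# Hypothetical: 1 in 10,000 calls is a deepfake; the detector catches 99% of
# fakes but also flags 1% of legitimate calls.
precision = alert_precision(0.0001, 0.99, 0.01)
print(f"{precision:.1%} of alerts are real deepfakes")  # prints "1.0% of alerts are real deepfakes"
```

Under these assumptions, roughly 99 of every 100 alerts are false alarms, which is how alert fatigue sets in even with a detector that looks accurate on paper.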

Generalization is the core issue: detection works on the specific deepfakes it was trained on. New techniques? New models? The system fails. You’re depending on yesterday’s solution for tomorrow’s threat.

The AI Code Problem

GitHub Copilot and similar AI coding tools revolutionized development. They also introduced new attack surfaces nobody anticipated.

The vulnerability stat is damning: 48% of AI code suggestions have security deficiencies. That’s not a small percentage. That’s nearly half.

The danger goes deeper than simple mistakes. AI models trained on public code repositories reproduce weak practices from compromised codebases. Old bugs get recycled into new applications, and vulnerabilities that were fixed long ago resurface in modern code.
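To make “weak practices” concrete, here is one classic pattern that is widespread in public code: building SQL queries with string formatting. This is an illustrative sketch using Python’s built-in `sqlite3` module and a hypothetical `users` table; it is not output captured from any specific AI tool.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str):
    # Weak pattern: user input is spliced directly into the SQL text.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str):
    # Parameterized query: the driver treats username strictly as data.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

payload = "' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2: the injected OR clause matches every row
print(len(find_user_safe(conn, payload)))    # 0: no user has that literal name
```

Both functions “work” for normal input, which is why this kind of flaw slips through when reviewers only check that the code runs.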

Attackers exploit this. They inject poisoned training data into AI models. They embed backdoors that activate under specific conditions. They compromise development tools that developers think are safe. Malware hides in PyPI packages, masquerading as AI tools. Nobody suspects it because it’s hiding in plain sight.
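One cheap guard against packages that masquerade as popular tools is to compare every new dependency name against an approved list and flag near-misses. The sketch below uses Python’s standard `difflib`; the `APPROVED` set and the 0.8 similarity cutoff are hypothetical choices, and a real policy would also check the publisher, release history, and hashes.

```python
import difflib

# Hypothetical allowlist of packages your team has already vetted.
APPROVED = {"requests", "numpy", "openai", "anthropic", "transformers"}


def review_dependency(name: str, cutoff: float = 0.8) -> str:
    """Classify a requested package name against the approved list."""
    normalized = name.lower().replace("_", "-")
    if normalized in APPROVED:
        return "approved"
    # A name that is close to, but not exactly, an approved name is a
    # common typosquatting signal.
    lookalikes = difflib.get_close_matches(normalized, sorted(APPROVED), n=1, cutoff=cutoff)
    if lookalikes:
        return f"suspicious: resembles '{lookalikes[0]}'"
    return "unknown: needs manual review"


for pkg in ["requests", "reqeusts", "leftpad"]:
    print(pkg, "->", review_dependency(pkg))
```

Anything not marked “approved” stops the install until a human has looked at it.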

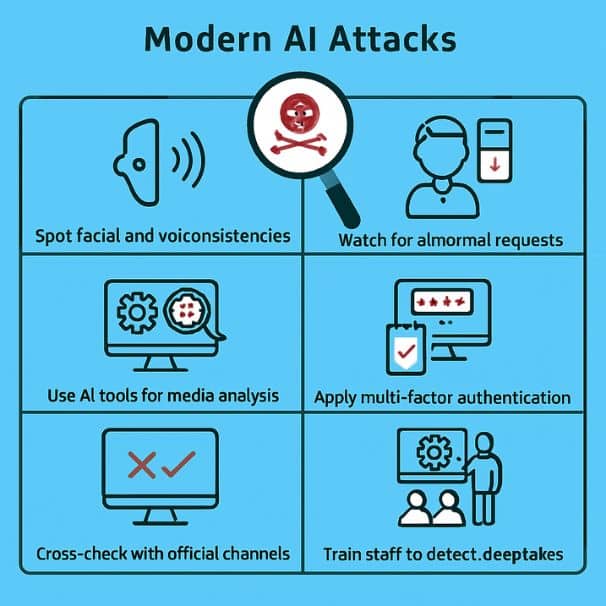

Real Defense Requires Real Change

You can’t patch your way out of this. Traditional security doesn’t address deepfake threats. You need to change how your team operates.

1. Zero Trust Architecture

Verify everything. Every request, every communication, every code change. Doesn’t matter if it sounds like your CTO or looks legitimate. Verify independently before trusting.
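“Verify independently” can be made mechanical for actions like deploys: the request must carry proof that does not depend on how convincing a voice or face is. Here is a minimal sketch using Python’s standard `hmac` module, assuming a hypothetical shared `DEPLOY_KEY` provisioned out of band. Production setups more often use per-person asymmetric keys (signed commits, for example), but the principle is the same.

```python
import hashlib
import hmac

# Hypothetical secret, loaded from a secrets manager in practice and never
# shared over the channel the request arrives on.
DEPLOY_KEY = b"example-key-do-not-use"


def sign_request(payload: bytes, key: bytes = DEPLOY_KEY) -> str:
    """Produce an HMAC-SHA256 tag over the exact request bytes."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_request(payload: bytes, signature: str, key: bytes = DEPLOY_KEY) -> bool:
    """Accept the request only if its tag matches; a convincing caller is not enough."""
    expected = sign_request(payload, key)
    # compare_digest is designed to resist timing attacks on the comparison.
    return hmac.compare_digest(expected, signature)


payload = b'{"service": "api", "version": "1.4.2", "env": "prod"}'
tag = sign_request(payload)
print(verify_request(payload, tag))                              # True
print(verify_request(payload.replace(b"1.4.2", b"6.6.6"), tag))  # False: payload altered
```

A deepfaked CTO can say anything on a call, but cannot produce a valid tag without the key.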

2. Real Detection Systems

Tools like Reality Defender’s Real Suite (launched November 2025) monitor communications in real time and flag attempts to manipulate them. Their RealMeeting plugin detects impersonation in Zoom and Microsoft Teams calls. RealCall specializes in real-time voice deepfake detection for call centers. They’re not perfect, but they’re better than nothing.

3. Strong Multi-Factor Authentication

Go beyond simple passwords and biometrics. Behavioral biometrics track typing speed, mouse movement patterns, and navigation habits, and anomalies get flagged. An attacker might clone someone’s voice, but replicating their actual behavior patterns is much harder.
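As a rough illustration of the behavioral idea, the sketch below compares the average time between keystrokes in a session against a user’s recorded baseline and flags large deviations. The three-standard-deviation threshold and the sample timings are hypothetical; real behavioral biometric products model far more signals than this.

```python
from statistics import mean, stdev


def is_anomalous(baseline_ms: list[float], session_ms: list[float],
                 threshold: float = 3.0) -> bool:
    """Flag a session whose mean keystroke interval is far from the baseline.

    The score is how many baseline standard deviations the session mean sits
    from the baseline mean (a simple z-score heuristic).
    """
    mu, sigma = mean(baseline_ms), stdev(baseline_ms)
    if sigma == 0:
        return mean(session_ms) != mu
    z = abs(mean(session_ms) - mu) / sigma
    return z > threshold


baseline = [120, 130, 125, 118, 135, 128, 122]  # hypothetical intervals, ms
print(is_anomalous(baseline, [124, 131, 119]))  # False: close to the usual rhythm
print(is_anomalous(baseline, [210, 220, 205]))  # True: a very different typist
```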

4. Secured Development Environments

Sandbox development. Isolate environments. If malicious code gets in, containment prevents spread to production. Docker containers and isolated sandboxes limit damage.
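For the container approach, most of the isolation comes from a handful of `docker run` flags. The sketch below only builds the argument list and does not start Docker; the image name, host path, and resource limits are hypothetical placeholders to adapt.

```python
import shlex


def sandbox_command(image: str, host_dir: str, command: list[str]) -> list[str]:
    """Build a `docker run` invocation for running untrusted code in isolation."""
    return [
        "docker", "run", "--rm",
        "--network", "none",                    # no network: no exfiltration or callbacks
        "--read-only",                          # container root filesystem is read-only
        "--tmpfs", "/tmp",                      # writable scratch space in memory only
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege gain via setuid binaries
        "--memory", "512m",                     # cap memory use
        "--pids-limit", "128",                  # cap process count (e.g. fork bombs)
        "-v", f"{host_dir}:/work:ro",           # mount the code read-only
        "-w", "/work",
        image, *command,
    ]


cmd = sandbox_command("python:3.12-slim", "/tmp/untrusted-project", ["python", "main.py"])
print(shlex.join(cmd))
```

Once the limits look right, the list can be passed to `subprocess.run`. Containers share the host kernel, so for truly hostile code a VM-based sandbox gives stronger isolation.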

Training Actually Works

Security awareness training needs to evolve past phishing awareness. People need to understand the specific threats posed by deepfakes.

Show real examples during training. Make the threat tangible. When developers see actual deepfake calls and understand how they work, they stay alert.

Transparent Verification Processes

Set up transparent verification processes for high-risk communication. Implement the “Two-Platform Rule”: verify critical decisions by using two different communication channels before proceeding.

- Example: If you receive a phone call asking you to deploy code, always confirm through a second channel such as email, Slack, or SMS, using contact details you already have rather than any the caller provides. If the request comes by video call, confirm it by calling a phone number you already know is genuine.
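The Two-Platform Rule is simple enough to encode directly in an approval workflow. In this hypothetical sketch, a high-risk request counts as verified only once it has been seen on at least two distinct channels, so repeated “confirmations” on the channel the request arrived on add nothing. Channel names such as `video_call` and `slack` are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class CriticalRequest:
    """A high-risk request (such as a production deploy) awaiting verification."""
    description: str
    origin_channel: str                       # where the request arrived, e.g. "video_call"
    confirmed_on: set[str] = field(default_factory=set)

    def confirm(self, channel: str) -> None:
        # Record a confirmation. Reach out using contact details you already
        # hold, never ones supplied in the request itself.
        self.confirmed_on.add(channel)

    def channels_used(self) -> set[str]:
        # The origin channel counts once, however many times it "confirms".
        return {self.origin_channel} | self.confirmed_on

    def approved(self, min_channels: int = 2) -> bool:
        return len(self.channels_used()) >= min_channels


req = CriticalRequest("Deploy hotfix to production", origin_channel="video_call")
req.confirm("video_call")   # the same caller saying "yes, it's really me"
print(req.approved())       # False: still only one channel
req.confirm("slack")        # confirmed by messaging the CTO's known account
print(req.approved())       # True
```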

Build a culture where questioning suspicious contacts is normal, where verification isn’t paranoia, where security-consciousness is valued, not seen as an obstacle to work.

Real-Time Threat Detection

Address real-time attacks with real-time solutions.

Spectral analysis examines voice and video for unnatural patterns, impossible pitch contours, and repetitive artifacts produced by synthetic generation. Behavioral biometric monitoring tracks user actions. Contextual validation checks whether a message aligns with what typically happens in your business.

The rollout happens step by step:

- First, figure out what normal behavior looks like on your team.

- Next, bring in detection tools and train your security team to recognize new alert types.

- Finally, tweak the system to reduce false alarms and gradually expand monitoring to more communication channels.

Set up automated responses for high-confidence threats.
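Automated responses usually hinge on the detector’s confidence score. Below is a minimal sketch of tiered handling, assuming a detector that emits a score between 0 and 1. The 0.95 and 0.6 thresholds are hypothetical and are exactly the kind of values the false-alarm tuning step is meant to adjust.

```python
from enum import Enum


class Action(Enum):
    BLOCK = "block the session and page security"
    ESCALATE = "hold the request for human review"
    LOG = "log for trend analysis"


def triage(confidence: float, block_at: float = 0.95, review_at: float = 0.6) -> Action:
    """Map a detector's confidence that media is synthetic to a response tier."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= block_at:
        return Action.BLOCK       # high confidence: respond automatically
    if confidence >= review_at:
        return Action.ESCALATE    # uncertain: a human decides
    return Action.LOG             # low confidence: avoid alert fatigue


for score in (0.98, 0.72, 0.15):
    print(score, triage(score).name)
```

Keeping only the top tier fully automated limits the damage a false positive can do.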

Code Security Enhancement

- Advanced static analysis tools are designed to spot the subtle patterns that reveal AI-generated weaknesses. Tools like Snyk Code (with DeepCode AI Fix) and GitHub CodeQL combine semantic code analysis with machine learning to find vulnerabilities that older, traditional scanners might miss.

- Behavioral code review isn’t just about whether the code works; it’s about how it’s written. Reviewers should be trained to recognize the traits of AI-generated code and to confirm that complex logic serves a real purpose rather than hiding a backdoor.

It’s also crucial to keep track of every dependency your software uses, including AI models and their training data, through software bills of materials (SBOMs). And don’t forget—hash verification and cryptographic checks are your last line of defense to ensure that third-party components haven’t been tampered with.
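Hash verification itself takes only a few lines. The sketch below streams a file through SHA-256 and compares the result with a digest pinned when the component was vetted; the file name and contents are hypothetical. For Python dependencies, pip’s hash-checking mode (`--require-hashes`, with `--hash=sha256:...` entries in a requirements file) applies the same check at install time.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large artifacts don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, pinned_sha256: str) -> bool:
    """True only if the file still matches the digest recorded at review time."""
    return sha256_of(path) == pinned_sha256.strip().lower()


with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "model.bin"          # hypothetical third-party artifact
    artifact.write_bytes(b"trusted weights")
    pinned = sha256_of(artifact)                # recorded when the file was vetted
    artifact.write_bytes(b"tampered weights")
    print(verify_artifact(artifact, pinned))    # False: contents changed
```

The pinned digest belongs in version control or the SBOM, not next to the artifact, so an attacker who swaps the file can’t swap the hash too.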

Incident Response Requires New Frameworks

Traditional cybersecurity frameworks aren’t built for deepfake attacks, so you need dedicated protocols for AI-driven threats. That means setting clear steps to spot deepfake risks and assess their severity. Your security teams also need the tools and training to check suspicious messages quickly and determine whether synthetic media is involved.

Make sure you have clear escalation paths in place. If someone tries to impersonate an executive, senior leadership should be alerted right away. Vulnerabilities caused by AI-generated code should follow your usual disclosure process. Different types of attacks call for different responses.
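Escalation paths are easiest to follow under pressure when they are written down as data rather than remembered. Here is a hypothetical routing table, with made-up team names and a deliberately simple severity rule:

```python
from dataclasses import dataclass

# Hypothetical routing: incident type -> first team to notify.
ESCALATION = {
    "executive_impersonation": "senior-leadership",
    "ai_code_vulnerability": "vulnerability-disclosure",
    "suspected_synthetic_media": "security-on-call",
}
DEFAULT_TEAM = "security-on-call"


@dataclass
class Incident:
    kind: str
    touches_money_or_prod: bool  # did the request involve payments or deploys?


def route(incident: Incident) -> tuple[str, str]:
    """Return (team to notify, severity) for a reported incident."""
    team = ESCALATION.get(incident.kind, DEFAULT_TEAM)
    high = incident.kind == "executive_impersonation" or incident.touches_money_or_prod
    return team, "high" if high else "medium"


print(route(Incident("executive_impersonation", touches_money_or_prod=False)))
print(route(Incident("ai_code_vulnerability", touches_money_or_prod=False)))
```

Unknown incident types fall back to the on-call security team, so nothing is silently dropped.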

It’s also essential to have communication plans ready—both inside your company and with the outside world—just in case a deepfake attack is confirmed. You’ll want to be honest and transparent while still protecting sensitive info and keeping stakeholder trust. The goal is to share enough to maintain confidence without giving attackers more ammunition.

Cross-Functional Collaboration Matters

Break down walls between development and security. DevSecOps practices need to incorporate AI threat intelligence.

- Legal teams need to understand how AI attacks impact regulatory compliance, evidence retention, and potential litigation. Deepfake incidents might require forensic preparation and reporting.

- Executive teams need guidance on addressing PR concerns and navigating deepfake attacks. Impersonating an executive can turn a PR issue into a disaster. Preparation prevents panic.

Future-Proofing Requires Agility

Security policies must adapt quickly as new AI threats emerge. Rigid frameworks fail. Agile security policies can be adjusted without a complete infrastructure overhaul.

Invest in continuous learning systems that evolve to detect emerging threats. Integrate automated threat intelligence feeds that provide early warnings about AI attack methods and tools. Build automated response mechanisms that deploy countermeasures when new threats are identified.

Blockchain verification creates tamper-evident logs of communications and code updates. Post-quantum cryptography prepares for a future in which quantum computers could break today’s public-key encryption. Hardware-backed security, using trusted execution environments and hardware security modules, provides a strong defense against advanced AI attacks.
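The tamper-evidence behind blockchain-style logs comes from hash chaining: each entry’s hash covers the previous entry’s hash, so editing any past record breaks every link after it. The sketch below shows only that hash-chain core, not a distributed ledger or consensus, and the record fields are hypothetical. In practice you would also publish or escrow the latest hash somewhere an attacker cannot rewrite, since anyone who controls the whole log could recompute the entire chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    # sort_keys makes serialization deterministic, so equal records hash equally.
    body = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class HashChainLog:
    """Append-only log in which each entry commits to everything before it."""

    def __init__(self) -> None:
        self.entries: list[tuple[dict, str]] = []

    def append(self, record: dict) -> str:
        prev = self.entries[-1][1] if self.entries else GENESIS
        digest = entry_hash(prev, record)
        self.entries.append((dict(record), digest))
        return digest

    def verify(self) -> bool:
        prev = GENESIS
        for record, stored in self.entries:
            if entry_hash(prev, record) != stored:
                return False
            prev = stored
        return True


log = HashChainLog()
log.append({"event": "deploy_approved", "by": "cto", "channels": ["video_call", "slack"]})
log.append({"event": "deploy", "commit": "abc123"})
print(log.verify())                  # True
log.entries[0][0]["by"] = "mallory"  # rewrite history
print(log.verify())                  # False
```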

Final Remarks

Deepfakes and AI-driven attacks have changed the game in cybersecurity. Old-school defenses aren’t enough anymore. Static systems can’t keep up with threats that think, learn, and use psychological tricks alongside technical loopholes.

If you want to keep your organization safe, you need to pair strong technical tools with sharp human instincts. Fast, real-time detection matters. So does double-checking everything—especially when something feels off. Most importantly, create a culture where security checks feel routine—not a burden. This mindset is what keeps you ahead of the game.

At the heart of defending your development team is the understanding that AI threats target both technology and human psychology. Combining multiple layers of defense, constant monitoring, and flexible responses is the best way to stay protected against sophisticated attacks.

Our defenses must evolve as AI evolves. Companies should see this as a challenge to improve resilience and trustworthiness, not as something that slows rapid development. The future of security isn’t preventing all attacks. It’s building systems and cultures that sense, respond to, and recover from advanced threats with both speed and innovation.

The threats exist. The tools exist. The stakes are higher than ever. Companies adopting comprehensive AI-driven threat protection now will maintain a competitive edge and stakeholder confidence in an increasingly threatening digital world.

The time to act is now.